Big data drives most modern businesses, and big data never sleeps. That means data integration and data migration need to be well-established, seamless processes, whether data is migrating from inputs to a data lake, from one repository to another, from a data warehouse to a data mart, or in or through the cloud.

Without a competent data migration plan, businesses can run over budget, end up with overwhelming data processes, or find that their data operations are functioning below expectations.

- What is Data Migration?

- Data Migration Process

- Data Migration Definition

- Data Migration Methodology

- Data Migration Strategy

- Data Migration Plan

- Software Migration

- What is Data Migration in DBMS?

- What is Data Migration in ETL?

- Why is Data Migration Important?

- How to do Data Migration

- Types of Data Migration

- Data Migration Jobs

- Data Migration Service

- Data Migration Examples

- Data Migration Flow Diagram

- Data Migration Tools

- Data Migration Software

- System Migration

- What is Database Migration?

- Cloud Data Migration

- Salesforce Data Migration

- Free Data Migration Software

- Migration Strategy

- Data Conversion Tools

What is Data Migration?

Data migration is the process of moving data from one location to another, one format to another, or one application to another. Generally, this is the result of introducing a new system or location for the data.


The business driver is usually an application migration or consolidation in which legacy systems are replaced or augmented by new applications that will share the same dataset. These days, data migrations are often started as firms move from on-premises infrastructure and applications to cloud-based storage and applications to optimize or transform their company.

Data Migration Process

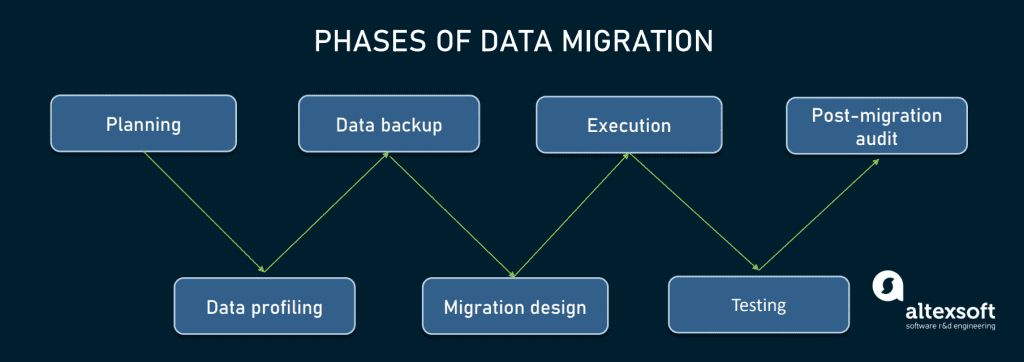

No matter the approach, a data migration project goes through the same key phases, namely:

- planning,

- data auditing and profiling,

- data backup,

- migration design,

- execution,

- testing, and

- post-migration audit.

Below, we’ll outline what you should do at each phase to transfer your data to a new location without losses, extensive delays, or ruinous budget overruns.

Planning: create a data migration plan and stick to it

Data migration is a complex process. It starts with an evaluation of existing data assets and the careful design of a migration plan. The planning stage can be divided into four steps.

Step 1 — refine the scope. The key goal of this step is to filter out any excess data and to define the smallest amount of information required to run the system effectively. So, you need to perform a high-level analysis of source and target systems, in consultation with data users who will be directly impacted by the upcoming changes.

Step 2 — assess source and target systems. A migration plan should include a thorough assessment of the current system’s operational requirements and how they can be adapted to the new environment.

Step 3 — set data standards. This will allow your team to spot problem areas across each phase of the migration process and avoid unexpected issues at the post-migration stage.

Step 4 — estimate budget and set realistic timelines. After the scope is refined and systems are evaluated, it’s easier to select the approach (big bang or trickle), estimate the resources needed for the project, and set schedules and deadlines. According to Oracle estimates, an enterprise-scale data migration project lasts six months to two years on average.

Data auditing and profiling: employ digital tools

This stage is for examining and cleansing the full scope of data to be migrated. It aims at detecting possible conflicts, identifying data quality issues, and eliminating duplications and anomalies prior to the migration.

Auditing and profiling are tedious, time-consuming, and labor-intensive activities, so in large projects, automation tools should be employed. Among popular solutions are Open Studio for Data Quality, Data Ladder, SAS Data Quality, Informatica Data Quality, and IBM InfoSphere QualityStage, to name a few.
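The kind of checks these tools automate can be illustrated in a few lines of Python. This is a minimal sketch, not a substitute for the tools above: the `profile_records` function, the sample rows, and the choice of `customer_id` as the key are hypothetical. It counts missing values per column and lists key values that occur more than once.

```python
from collections import Counter
from typing import Any


def profile_records(records: list[dict[str, Any]], key: str) -> dict[str, Any]:
    """Summarize basic data quality signals for a list of row dictionaries."""
    columns = sorted({col for row in records for col in row})
    # A value counts as missing if the column is absent, None, or an empty string.
    missing = {
        col: sum(1 for row in records if row.get(col) in (None, ""))
        for col in columns
    }
    # Key values that appear more than once are candidate duplicates.
    key_counts = Counter(row.get(key) for row in records)
    duplicate_keys = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)
    return {
        "row_count": len(records),
        "missing_per_column": missing,
        "duplicate_keys": duplicate_keys,
    }


rows = [
    {"customer_id": 1, "email": "a@example.com", "country": "US"},
    {"customer_id": 2, "email": "", "country": "DE"},
    {"customer_id": 2, "email": "b@example.com", "country": None},
]
print(profile_records(rows, key="customer_id"))
```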

Data backup: protect your content before moving it

Technically, this stage is not mandatory. However, best practices of data migration dictate the creation of a full backup of the content you plan to move — before executing the actual migration. As a result, you’ll get an extra layer of protection in the event of unexpected migration failures and data losses.
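If the source happens to be a SQLite database, Python’s standard library can take a consistent full copy with `sqlite3.Connection.backup`. The sketch below assumes a SQLite source; for server databases you would use the vendor’s own backup tooling instead. The function name and file paths are illustrative. It also runs `PRAGMA integrity_check` on the copy, so a broken backup is caught before it is needed.

```python
import sqlite3


def backup_sqlite(source_path: str, backup_path: str) -> None:
    """Copy a whole SQLite database to backup_path and verify the copy."""
    src = sqlite3.connect(source_path)
    dst = sqlite3.connect(backup_path)
    try:
        # Connection.backup copies every page of the source database.
        src.backup(dst)
        # Check the copy before relying on it as a restore point.
        (status,) = dst.execute("PRAGMA integrity_check").fetchone()
        if status != "ok":
            raise RuntimeError(f"backup failed integrity check: {status}")
    finally:
        dst.close()
        src.close()
```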

Migration design: hire an ETL specialist

The migration design specifies migration and testing rules, clarifies acceptance criteria, and assigns roles and responsibilities across the migration team members.

Though several technologies can be used for data migration, extract, transform, and load (ETL) is the preferred one. It makes sense to hire an ETL developer — or a dedicated software engineer with deep expertise in ETL processes, especially if your project deals with large data volumes and complex data flow.

At this phase, ETL developers or data engineers write scripts for data transition or choose and customize third-party ETL tools. An integral part of ETL is data mapping. Ideally, it involves not only an ETL developer but also a system analyst who knows both the source and target systems, and a business analyst who understands the value of the data to be moved.
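A data mapping is essentially a table of source field, target field, and conversion rule. A minimal Python sketch of that idea follows. The column names, the date format, and the `FieldMapping` type are hypothetical; in a real project they would come from the mapping document the analysts agree on.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Callable


@dataclass(frozen=True)
class FieldMapping:
    source: str                                    # column name in the legacy system
    target: str                                    # column name in the new system
    transform: Callable[[Any], Any] = lambda v: v  # conversion applied on the way


# Hypothetical mapping from a legacy customer table to a new schema.
CUSTOMER_MAPPING = [
    FieldMapping("CUST_NO", "customer_id", int),
    FieldMapping("CUST_NAME", "full_name", lambda v: v.strip().title()),
    FieldMapping("SIGNUP_DT", "signed_up_on",
                 lambda v: datetime.strptime(v, "%d/%m/%Y").date().isoformat()),
]


def apply_mapping(row: dict[str, Any], mapping: list[FieldMapping]) -> dict[str, Any]:
    """Build a target-system row from a source-system row."""
    return {m.target: m.transform(row[m.source]) for m in mapping}


legacy_row = {"CUST_NO": "0042", "CUST_NAME": "  ada lovelace ", "SIGNUP_DT": "05/03/2021"}
print(apply_mapping(legacy_row, CUSTOMER_MAPPING))
```

Keeping the mapping as data rather than scattered code makes it easy for the system and business analysts to review it line by line.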

The duration of this stage depends mainly on the time needed to write scripts for ETL procedures or to acquire appropriate automation tools. If all required software is in place and you only have to customize it, migration design will take a few weeks. Otherwise, it may span a few months.

Execution: focus on business goals and customer satisfaction

This is when the migration (data extraction, transformation, and loading) actually happens. In the big bang scenario, it will last no more than a couple of days. If data is transferred in trickles instead, execution will take much longer, but with zero downtime and the lowest possible risk of critical failures.

If you’ve chosen a phased approach, make sure that migration activities don’t hinder usual system operations. Besides, your migration team must communicate with business units to refine when each sub-migration is to be rolled out and to which group of users.
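In a trickle migration, data moves in small, resumable batches while the old system keeps running. The sketch below shows such a batching loop with Python’s built-in `sqlite3`, assuming both sides have a `customers(id, name)` table keyed by a positive integer `id`; the table and function names are hypothetical. It copies a snapshot only: changes made in the source to rows that were already copied still have to be captured separately, for example with change data capture.

```python
import sqlite3


def migrate_in_batches(src: sqlite3.Connection, dst: sqlite3.Connection,
                       batch_size: int = 500) -> int:
    """Copy rows from src.customers to dst.customers in small committed batches.

    Keyset pagination (WHERE id > last_id) lets each batch start where the
    previous one stopped, so the job can pause between batches.
    Assumes positive integer ids.
    """
    last_id, copied = 0, 0
    while True:
        batch = src.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not batch:
            return copied
        # INSERT OR REPLACE makes re-running a batch harmless (idempotent).
        dst.executemany("INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", batch)
        dst.commit()  # each batch is its own unit of work
        last_id = batch[-1][0]
        copied += len(batch)
```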

Data migration testing: check data quality across phases

In fact, testing is not a separate phase, as it is carried out across the design, execution, and post-migration phases. If you have taken a trickle approach, you should test each portion of migrated data to fix problems in a timely manner.

Frequent testing ensures that data elements are transferred safely and that, on entering the target infrastructure, they are of high quality and meet the requirements.
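A common automated test after each batch or phase is to compare row counts and a checksum of the content between source and target. Here is a minimal sketch using `sqlite3` and `hashlib`, assuming both tables have the same column order and a shared primary key; the function names are illustrative. Across different database engines you would first need to normalize types (dates, decimals, text encodings) before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(con: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row_count, SHA-256 digest over all rows in key order)."""
    digest = hashlib.sha256()
    count = 0
    # Table and key names are interpolated, so they must come from trusted config.
    for row in con.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def validate_migration(src: sqlite3.Connection, dst: sqlite3.Connection,
                       table: str, key: str) -> bool:
    """True if source and target hold the same rows with the same values."""
    return table_fingerprint(src, table, key) == table_fingerprint(dst, table, key)
```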

Post-migration audit: validate results with key clients

Before launching migrated data in production, results should be validated with key business users. This stage ensures that information has been correctly transported and logged. After a post-migration audit, the old system can be retired.

Data Migration Definition

In general terms, data migration is the transfer of the existing historical data to new storage, system, or file format. This process is not as simple as it may sound. It involves a lot of preparation and post-migration activities including planning, creating backups, quality testing, and validation of results. The migration ends only when the old system, database, or environment is shut down.

Usually, data migration comes as a part of a larger project such as

- legacy software modernization or replacement,

- the expansion of system and storage capacities,

- the introduction of an additional system working alongside the existing application,

- the shift to a centralized database to eliminate data silos and achieve interoperability,

- moving IT infrastructure to the cloud, or

- merger and acquisition (M&A) activities when IT landscapes must be consolidated into a single system.

Data Migration Methodology

The data migration process consists of phases that provide a tested methodology. You can use the data migration methodology to scope, plan, and document your migration choices and tasks.

- Discovery phase: Collect information about hosts, storage, and fabrics in the environment.

- Analysis phase: Examine the collected data, and determine the appropriate migration approach for each host or storage array.

- Planning phase: Create and test migration plans, provision destination storage, and configure migration tools.

- Execution phase: Migrate the data and perform host remediations.

- Verification phase: Validate the new system configurations and provide documentation.

Data Migration Strategy

Let’s move on to the best data migration strategies and best practices.

- Back up your data: One of the top data migration best practices is to back up your data. You can’t afford to lose even a single piece of data. Backups protect your data from mishaps that may occur during the process: if a failure during the migration corrupts or loses data, you can restore it from the backup.

- Design the migration: There are two ways to design the data migration steps – big bang and trickle. Big bang involves completing the data migration in a limited timeframe, during which the servers would be down. Trickle involves completing the data migration process in stages. Designing the migration enables you to determine which is the right method for your requirements.

- Test the data migration plan: We cannot overstate the importance of testing the strategy you plan to choose. You need to conduct live tests with real data to gauge the effectiveness of the process. This may involve some risk, because the data is critical. To ensure that the migration will be complete, test every aspect of the data migration plan.

- Set up an audit system: Another top data migration strategy and best practice is to set up an audit system for the data migration process. Every stage needs to be carefully audited for errors and methodologies. Audit is important to ensure the accuracy of data migration. Without an audit system, you cannot really monitor what is going on with your data at each phase.

- Simplify with data migration tools: It is important to choose data migration software that simplifies the process. Focus on the software’s connectivity, security, scalability, and speed. Data migration is challenging when the right tools are not available, so make sure the software you choose doesn’t set the process back.

Data Migration Plan

Here are five steps of a data migration plan that will help make your data transfer a success.

1. Back up your data

Sometimes things don’t go according to plan, so before you start migrating your data from one system to another, make sure you have a data backup to avoid any potential data loss. If problems arise (for instance, your files get corrupted, go missing, or are incomplete), you’ll be able to restore your data to its original state.

2. Verify data complexity and quality

Another best practice for data migration is verifying data complexity to decide on the best approach to take. Check and assess different forms of organizational data, verify what data you’re going to migrate, where it sits, where and how it’s stored, and the format it’s going to take after the transfer.

Check how clean your current data is. Does it require any updating? It’s worth conducting a data quality assessment to gauge the quality of legacy data, and implementing data firewalls to separate good data from bad data and eliminate duplicates.
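The firewall idea can be expressed as a gate that every legacy record passes through before migration: records that break a rule, or repeat a key that has already been accepted, are set aside for review instead of being loaded. A minimal Python sketch follows. The two rules, the field names, and the e-mail pattern are hypothetical examples of data standards, not a complete validation suite.

```python
import re
from typing import Any, Callable, Optional

Row = dict[str, Any]
Rule = Callable[[Row], Optional[str]]

# Deliberately loose pattern: one "@" and a dot in the domain part.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical data standards; each rule returns an error message or None.
RULES: list[Rule] = [
    lambda r: None if r.get("customer_id") is not None else "missing customer_id",
    lambda r: None if EMAIL_RE.match(r.get("email") or "") else "invalid email",
]


def quality_gate(rows: list[Row], key: str = "customer_id"):
    """Split rows into clean rows and (row, errors) pairs set aside for review."""
    clean: list[Row] = []
    rejected: list[tuple[Row, list[str]]] = []
    seen: set = set()
    for row in rows:
        errors = [msg for msg in (rule(row) for rule in RULES) if msg is not None]
        # Only the first valid record for each key passes; repeats are duplicates.
        if not errors and row[key] in seen:
            errors.append("duplicate key")
        if errors:
            rejected.append((row, errors))
        else:
            seen.add(row[key])
            clean.append(row)
    return clean, rejected
```

Rejected records keep their error messages, which gives data owners a concrete list to fix rather than a silent drop.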

3. Agree on data standards

As soon as you know how complex the data is, you must put some standards in place. Why? To allow yourself to spot any problem areas and to ensure you can avoid unexpected issues occurring at the final stage of the project.

Furthermore, data is fluid: it changes continuously. Therefore, putting standards in place will help you with data consolidation and, as a result, ensure more successful data use in the future.

4. Specify future and current business rules

You have to guarantee regulatory compliance, which means defining current and future business rules for your data migration process. The migration must comply with these validation and business rules so that data is transferred consistently, and this can only be achieved by establishing data migration policies.

You might have to develop a set of rules for your data before migration and then re-evaluate these rules and increase their complexity for your data after the migration process ends.

5. Create a data migration strategy and plan

The next step toward a successful data migration is agreeing on the strategy. There are two approaches you can take: “big bang” migration or “trickle” migration.

If you select the big bang migration, the entire data transfer is completed within a specific timeframe, for example, in 24 hours. Live systems are down while data goes through ETL processing and is moved into a new database. It’s quicker but riskier.

The trickle migration splits data migration into stages. Both systems, the new one and the old one, run concurrently. This means there is no downtime. While this approach is more complex, it’s safer, as data is migrated continuously.

Software Migration

Software migration is the practice of transferring data, accounts, and functionality from one operating environment to another. It could also refer to times when users are migrating the same software from one piece of computer hardware to another, or changing both software and hardware simultaneously.

Software migration is a generic term that can refer to the transfer of applications, operating systems, databases, networks, content management systems (CMS), or even an entire IT infrastructure.

Migrating from one piece of software to another can be hard enough for an individual. For a large team or an entire company, it’s an IT nightmare. Because so many things can go wrong in such a long process, it’s important to plan out and follow any software migration carefully.

What is Data Migration in DBMS?

Databases are data storage media where data is structured in an organized way. Databases are managed through database management systems (DBMS).

Hence, database migration involves moving from one DBMS to another or upgrading from the current version of a DBMS to the latest version of the same DBMS. The former is more challenging, especially if the source system and the target system use different data structures.
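When source and target are different DBMSs, even column types have to be translated. The sketch below illustrates the idea for a handful of Microsoft SQL Server types mapped to PostgreSQL. The choice of these two products and the mapping table are assumptions made for illustration; this is far from a complete converter (it ignores decimals, collations, defaults, and constraints), and every entry should be checked against both vendors’ documentation for your versions.

```python
import re

# Partial, illustrative mapping of SQL Server types to PostgreSQL types.
FIXED_TYPES = {
    "INT": "INTEGER",
    "BIGINT": "BIGINT",
    "BIT": "BOOLEAN",
    "DATETIME": "TIMESTAMP",
    "DATETIME2": "TIMESTAMP",
    "UNIQUEIDENTIFIER": "UUID",
    "MONEY": "NUMERIC(19,4)",
}
# String types whose declared length is carried over to VARCHAR(n).
STRING_TYPES = {"VARCHAR", "NVARCHAR"}

# A type name with an optional single size argument, e.g. NVARCHAR(100).
TYPE_RE = re.compile(r"\s*(\w+)\s*(?:\(\s*(\w+)\s*\))?\s*")


def convert_type(sqlserver_type: str) -> str:
    """Translate e.g. 'NVARCHAR(100)' or 'bit' into a PostgreSQL column type."""
    match = TYPE_RE.fullmatch(sqlserver_type)
    if not match:
        raise ValueError(f"unrecognized type: {sqlserver_type!r}")
    base, size = match.group(1).upper(), match.group(2)
    if base in STRING_TYPES:
        if size is None:
            raise ValueError(f"{base} needs an explicit length")
        # (MAX) has no fixed limit, so map it to TEXT; otherwise keep the length.
        return "TEXT" if size.upper() == "MAX" else f"VARCHAR({size})"
    if base in FIXED_TYPES:
        return FIXED_TYPES[base]  # any precision argument is dropped
    raise ValueError(f"no mapping defined for {base}")


columns = {"id": "INT", "email": "NVARCHAR(255)", "notes": "nvarchar(max)", "active": "bit"}
ddl = ", ".join(f"{name} {convert_type(t)}" for name, t in columns.items())
print(f"CREATE TABLE customers ({ddl});")
# CREATE TABLE customers (id INTEGER, email VARCHAR(255), notes TEXT, active BOOLEAN);
```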

DBMS vendors release new versions frequently; some are minor and some are major. Minor upgrades are quite easy for DBAs to apply, as they only need to run some patches. Major upgrades, however, require a lot of work, and many DBAs postpone them as long as they can afford to. But once the DBMS vendor stops supporting the current version, upgrading to the latest version becomes unavoidable.

What is Data Migration in ETL?

ETL stands for extract, transform, and load, a process used to gather data from different sources, transform the data according to business rules or needs, and load it into a target database. Data migration is the process of moving data from one system to another.

While this may seem straightforward, it involves a change in storage and in the database or application. In the context of the extract/transform/load (ETL) process, any data migration will involve at least the transform and load steps.
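To make the three stages concrete, here is a minimal extract-transform-load pipeline in plain Python: it extracts rows from a CSV export, applies two business rules, and loads the result into a SQLite table. The CSV contents, column names, and rules are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical legacy export: amounts in cents, inconsistent country codes.
LEGACY_CSV = """order_id,amount_cents,country
1001,1999,us
1002,500,DE
1003,12000, fr
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read the raw rows from the source format."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, float, str]]:
    """Transform: store amounts in currency units, upper-case country codes."""
    return [
        (int(r["order_id"]), int(r["amount_cents"]) / 100, r["country"].strip().upper())
        for r in rows
    ]


def load(con: sqlite3.Connection, rows: list[tuple[int, float, str]]) -> None:
    """Load: write the transformed rows into the target table."""
    con.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    con.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    con.commit()


target = sqlite3.connect(":memory:")
load(target, transform(extract(LEGACY_CSV)))
print(target.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```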

What is the difference between data migration and ETL?

Data migration and ETL are fairly similar in that both involve moving data from one source to another. However, data migration doesn’t necessarily involve changing the format, while ETL does (that is why “transform” is in its name).

ETL and data integration are both used when organizations want to get more out of the data they have. However, data integration doesn’t necessarily involve transforming data.

Why is Data Migration Important?

No matter what industry you work in, there’s a high chance that your company will need to transfer large sets of data from one platform to another at some point. Known as data migration, this process can help your company store a larger amount of data at a time, maintain the integrity of said data, and boost productivity.

When it comes to strategizing data migration, you will probably need to hire some external help. Some companies might be tempted to handle it by themselves, but this can lead to decreased efficiency and increased costs down the road. If your company is preparing to migrate large sets of data, it’s imperative that you research best practices for successfully completing the process.

Data migration offers a number of benefits to companies. Businesses that successfully migrate their data enjoy benefits such as:

- A boost in productivity and efficiency

- Upgraded applications and services

- Reduced storage costs

- Improved ability to scale resources

- A decrease in unnecessary interruptions

How to do Data Migration

Below we have listed all of the essential steps for pulling off a successful data migration. There are typically seven steps involved in migrating data:

- Identify the format of the data you’re planning to transfer

- Review the size of your project to determine what resources it will require and how much you need to budget

- Back up data in case an issue occurs during the migration process

- Ensure you are using the right software for your data migration

- Upload the data to start the migration process

- Perform final testing on the target system to make sure the data has been successfully transferred

- Invest in follow-up maintenance to make sure the data’s integrity has been maintained

Having a reliable team of experienced employees and consultants can help you ensure this process goes smoothly. As you move through each step, make sure you are following all of the necessary procedures involved in the migration.

Types of Data Migration

There are four different types of data migration a company might perform:

- Database Migration

- Application Migration

- Storage Migration

- Business Process Migration

Database Migration

Database migration is a common process for companies to undergo. Because companies rely so much on databases nowadays, they might end up changing vendors, upgrading their software, or even moving their data to a cloud-based platform at some point.

Application Migration

Application migrations are necessary when a company switches platforms or hires a new vendor. For example, your company might switch to a new HR database or project management software. When this happens, it will need to ensure the data can be transferred successfully from the existing application to the new one.

Storage Migration

Storage migration involves transferring data from one storage system to another, for example from local disks to the cloud. Companies are starting to move their data to the cloud for a number of reasons, such as security, cost, and accessibility.

Business Process Migration

Companies need to perform a business process migration when transferring applications and databases that contain information about their customers, services, and operations. When a company merges with another one or wants to reorganize its structure, it will need to go through a business process migration.

Data Migration Jobs

The process of migrating data from an old application to a new one, or to an entirely different platform, is handled by a team of data migration specialists. Data migration specialists plan, implement, and manage various forms of data for organizations, particularly data streams moving between disparate systems.

Data migration professionals typically manage the following responsibilities:

- Meet with clients or management to understand data migration requirements and needs

- Strategize and plan an entire project, including moving the data and converting content as necessary, while considering risks and potential impacts

- Audit existing data systems and deployments and identify errors or areas for improvement

- Cleanse or translate data so that it can be effectively moved between systems, apps, or software

- Oversee the direct migration of data, which may require minor adjustments

- Test the new system after the migration process as well as the resulting data to find errors and/or points of corruption

- Document everything from the strategies used to the exact migration processes put in place—including documenting any fixes or adjustments made

- Develop and propose data migration best practices for all current and future projects

- Ensure compliance with regulatory requirements and guidelines for all migrated data

Essentially, data migration specialists move data from one place to another, generally within the same organization.

Top Data Migration Employers/Organizations

To understand where one would be working and with what organizations one might be involved, it makes sense to explore some of the most popular employers in the industry:

- EClinicalWorks

- Capgemini

- Deloitte

- Thomson Reuters

- First Tek

- Cognizant

- Globanet Consulting Services

- C-Solutions Inc

- Scrollmotion Inc

- Ecolob

- Accenture

- DXC Technology

Data Migration Service

When using AWS Database Migration Service (AWS DMS), for example, you connect the service to your source database. The service then reads the data, formats it to match the requirements of your target database, and transfers the data according to the migration task you have defined.
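For illustration, this is roughly how such a migration task could be defined with the AWS SDK for Python (boto3). It is a sketch, not a deployment script: it assumes the source endpoint, target endpoint, and replication instance already exist and that AWS credentials are configured, and the ARNs, task identifier, and schema name you pass in are placeholders. `MigrationType="full-load-and-cdc"` requests the initial transfer followed by ongoing change data capture (CDC).

```python
import json


def build_table_mappings(schema: str, table_pattern: str = "%") -> str:
    """Return a DMS table-mapping document that includes the matching tables."""
    mapping = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-source-tables",
                "object-locator": {"schema-name": schema, "table-name": table_pattern},
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(mapping)


def create_migration_task(task_id: str, source_arn: str, target_arn: str,
                          instance_arn: str, schema: str) -> dict:
    """Create a DMS task that performs a full load and then replicates changes."""
    import boto3  # imported here so build_table_mappings works without boto3

    dms = boto3.client("dms")
    return dms.create_replication_task(
        ReplicationTaskIdentifier=task_id,
        SourceEndpointArn=source_arn,
        TargetEndpointArn=target_arn,
        ReplicationInstanceArn=instance_arn,
        MigrationType="full-load-and-cdc",  # initial copy, then ongoing changes
        TableMappings=build_table_mappings(schema),
    )
```

Creating the task does not start it; starting is a separate `start_replication_task` call on the same client.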

These processes occur primarily in-memory for greater performance. However, large transfers, logs, and cached transactions require disk storage or buffering.

Once replication from source to target begins, DMS begins monitoring your database for changes. This is done with Change Data Capture processes, known as AWS DMS CDC. CDC ensures that your data remains consistent between databases during the transfer process. It does this by caching changes made during migration and processing the changes once the primary migration task is over.

After the initial transfer is complete, Database Migration Service works through any cached items, applying changes to the target as needed. The service continues to monitor for and cache changes during this time and until you transfer your workloads and shut down your source database.
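The ordering this relies on (bulk copy first, then replay of the changes cached during the copy, then direct application of new changes) can be modeled in a few lines. This is a toy in-memory Python model meant only to illustrate that sequence; it is not how AWS DMS is implemented, and the `Change` and `CdcMigration` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Change:
    op: str                      # "upsert" or "delete"
    key: int
    value: Optional[str] = None


@dataclass
class CdcMigration:
    """Toy model of a full load followed by applying cached changes."""
    target: dict[int, str] = field(default_factory=dict)
    cache: list[Change] = field(default_factory=list)
    full_load_done: bool = False

    def capture(self, change: Change) -> None:
        # While the full load runs, changes are only cached, not applied.
        if self.full_load_done:
            self.apply(change)
        else:
            self.cache.append(change)

    def apply(self, change: Change) -> None:
        if change.op == "upsert":
            self.target[change.key] = change.value
        elif change.op == "delete":
            self.target.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation: {change.op}")

    def finish_full_load(self, snapshot: dict[int, str]) -> None:
        self.target.update(snapshot)   # the bulk copy
        for change in self.cache:      # replay cached changes in order
            self.apply(change)
        self.cache.clear()
        self.full_load_done = True     # later changes are applied directly
```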

Data Migration Examples

Here’s an example of migrating a legacy application that uses Microsoft SQL Server. This SQL Server instance uses the AlwaysOn availability groups (AG) feature and runs on two hosts with locally attached disks. Daily backups are stored on a file system and retained for two weeks; weekly backups are stored in the local object store for long-term retention.

To help architect the migration of this application to Oracle Cloud Infrastructure, let’s identify the application components and answer a few key questions. The following diagram describes the application as it resides within the current on-premises environment.

A successful move of the application to Oracle Cloud Infrastructure must move both the structured database files and the archive backup data. This is an opportunity to trim the amount of data and purge your archive to meet the data requirements of your business.

Now consider which parts of the data must be accessible across geographies or availability domains. In its current state, the application is structured for high availability within a single region. Focusing on the migration of data, availability domains provide the redundancy the application needs to fail over within a reasonable timeframe.

To reduce latency and ensure performance, keep the block volume within the same fault domain as the SQL Server host.

The data must be highly available, but how much data can actually be lost before the loss starts to significantly impact business operations? SQL Server availability groups manage data loss between the individual databases. This consideration also affects the backup policies for the application. In this example, the backups are occurring on the secondary server, so they don’t impact the performance of the primary server.

How many copies of the data are needed to ensure both high availability and business continuity for the application? The organization of the AlwaysOn availability groups within fault domains and availability domains is key when building the new home for the application in Oracle Cloud Infrastructure.

Because the object storage is redundant across the region, the decision to replicate the backup data to another region must be part of the business operations model.

Using the Oracle Cloud Infrastructure Object Storage service to replace an offsite tape solution can be accomplished with cross-region replication. Cross-region replication for the backup data and object storage ensures that the data is protected from region-wide outages that might affect the business.

Data Migration Flow Diagram

ShareGate Desktop is a client-side-only application. The app uses the available SharePoint APIs (CSOM, REST, Graph, SOAP, etc.) for your migrations and does not impact the database directly.

1. Requests are sent to the APIs to get information from the source.

2. SharePoint sends calls to Active Directory through the people picker to resolve your users’ permissions and metadata values, such as Created By and Modified By.

3. SharePoint returns the data from the calls sent in point (1) to ShareGate Desktop.

4. Requests are sent to build the structure at the destination.

5. In Normal Mode, list items and documents are sent directly to the tenant. Some requests are sent to get information about the destination.

6. ShareGate Desktop receives information for some of the requests from point (4).

7. When migrating with Insane Mode, the app uses the SharePoint Online migration API. Your list items and documents are packaged and sent to Azure Storage with a manifest package. Some requests are sent to Azure to get a status update on the import to Microsoft 365 process at point (7).

8. Microsoft 365 imports the packages from Azure Storage.

9. Azure returns status updates about the packages being imported to Microsoft 365.

Data Migration Tools

There are three primary types of data migration tools to consider when migrating your data:

- On-premise tools. Designed to migrate data within the network of a large or medium Enterprise installation.

- Open Source tools. Community-supported and developed data migration tools that can be free or very low cost.

- Cloud-based tools. Designed to move data to the cloud from various sources and streams, including on-premise and cloud-based data stores, applications, services, etc.

Let’s now look at the popular ones.

1. Talend

Availability: Open source

Talend Open Studio is an open-architecture product that gives users the flexibility to solve migration and integration challenges easily. It is easy to adopt for data integration, big data, application integration, and more.

Key features:

- It simplifies ETL processes for large and multiple data sets.

- Maintains the precision and integrity of data throughout the migration.

2. Pentaho

By tightly coupling data integration with business analytics, the Pentaho platform from Hitachi Vantara brings together IT and business users to ingest, prepare, blend, and analyze all data that impacts business results. Pentaho’s open source heritage drives continued innovation in a modern, unified, flexible analytics platform that helps organizations accelerate their analytics data pipelines.

Pentaho Features:

- Ad hoc Reporting

- Benchmarking

- Content Management

- Customizable Dashboard

- Dashboard

- Data Analysis Tools

- Data Import/Export

- Data Quality Control

- Data Visualization

- Drag & Drop

- Job Scheduling

- Key Performance Indicators

- Match & Merge

- OLAP

- Performance Metrics

3. Informatica

Informatica is a software development company that offers data integration products, including products for ETL, data masking, data quality, data replication, data virtualization, and master data management. Its PowerCenter ETL/data integration tool is the most widely used, and in common usage, “Informatica” usually refers to Informatica PowerCenter.

Informatica PowerCenter is used for data integration. It can connect to and fetch data from heterogeneous sources and process that data.

For example, you can connect to both a SQL Server database and an Oracle database and integrate their data into a third system.

4. SnapLogic

SnapLogic is a software company that offers cloud integration products to allow customers to connect cloud-based data and applications with on-premise and cloud-based business systems. The products are designed to allow even business users with limited technology skills to access and integrate data from different sources.

The SnapLogic Integration Cloud includes:

- Snaplex: A self-upgrading execution network that streams data between applications, databases, files, social and big data sources. When running in the cloud, the Snaplex is able to scale up and down elastically based on the volume of data being processed or the latency requirements of the integration flow. The Snaplex can be configured to run on-premises while integrating on-premise systems.

- SnapLogic Designer: An HTML5-based product for the creation of integration workflows (called pipelines), which are sequences of Snaps connected to serve a purpose. Snaps can be connected through a visual drag-and-drop interface with no coding required.

- SnapLogic Manager: An interface for the management of projects, accounts and connections, providing cluster management, job scheduling, failover, notification and alerting.

- SnapLogic Monitoring Dashboard: An interface for local or remote monitoring of the performance of integration workloads. The Monitoring Dashboard is available in a browser and on mobile devices such as the iPad, allowing for complete remote visibility into real-time and scheduled bulk integrations.

- SnapStore: An online marketplace where a collection of downloadable Snaps has been made available by SnapLogic and its partners. Independent software vendors (ISVs) can sell their own Snaps through the SnapStore.

5. Apache NIFI

Apache NiFi is a powerful, easy-to-use, and reliable system for processing and distributing data between disparate systems. It is based on the Niagara Files technology developed by the NSA, which was donated to the Apache Software Foundation after 8 years. It is distributed under the Apache License, Version 2.0 (January 2004). At the time of writing, the latest version of Apache NiFi was 1.7.1.

Apache NiFi is a real time data ingestion platform, which can transfer and manage data transfer between different sources and destination systems. It supports a wide variety of data formats like logs, geo location data, social feeds, etc.

It also supports many protocols, such as SFTP, HDFS, and Kafka. This support for a wide variety of data sources and protocols makes the platform popular in many IT organizations.

The general features of Apache NiFi are as follows:

- Apache NiFi provides a web-based user interface, which provides seamless experience between design, control, feedback, and monitoring.

- It is highly configurable, offering guaranteed delivery, low latency, high throughput, dynamic prioritization, back pressure, and the ability to modify flows at runtime.

- It also provides data provenance module to track and monitor data from the start to the end of the flow.

- Developers can create their own custom processors and reporting tasks according to their needs.

- NiFi also provides support to secure protocols like SSL, HTTPS, SSH and other encryptions.

- It also supports user and role management and also can be configured with LDAP for authorization.
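To make the back-pressure idea concrete, here is a minimal Python sketch, not NiFi code: a bounded queue stands in for a NiFi connection, and the upstream step blocks whenever the queue reaches its threshold, resuming only when the downstream step drains it. The threshold value and function names are hypothetical; in NiFi itself, back pressure is configured on each connection rather than written in code.

```python
import queue
import threading

# Hypothetical threshold, standing in for a per-connection back-pressure limit.
BACK_PRESSURE_THRESHOLD = 5


def producer(q: queue.Queue, n_records: int) -> None:
    """Upstream step: put() blocks while the queue is full (back pressure)."""
    for i in range(n_records):
        q.put({"id": i})
    q.put(None)  # sentinel: no more records


def consumer(q: queue.Queue, out: list) -> None:
    """Downstream step: draining the queue lets the producer resume."""
    while True:
        item = q.get()
        if item is None:
            break
        out.append(item["id"])


def run_flow(n_records: int) -> list:
    """Run one producer and one consumer connected by a bounded queue."""
    q: queue.Queue = queue.Queue(maxsize=BACK_PRESSURE_THRESHOLD)
    out: list = []
    t_prod = threading.Thread(target=producer, args=(q, n_records))
    t_cons = threading.Thread(target=consumer, args=(q, out))
    t_prod.start()
    t_cons.start()
    t_prod.join()
    t_cons.join()
    return out
```

Because the queue never holds more than the threshold, a slow consumer slows the producer down instead of letting unprocessed records pile up in memory.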

6. IBM InfoSphere DataStage

IBM® InfoSphere DataStage is a data integration tool for designing, developing, and running jobs that move and transform data.

InfoSphere DataStage is the data integration component of IBM InfoSphere Information Server. It provides a graphical framework for developing the jobs that move data from source systems to target systems.

The transformed data can be delivered to data warehouses, data marts, operational data stores, real-time web services and messaging systems, and other enterprise applications. InfoSphere DataStage supports extract, transform, and load (ETL) and extract, load, and transform (ELT) patterns. It uses parallel processing and enterprise connectivity to provide a highly scalable platform. With InfoSphere DataStage, your company can accomplish these goals:

- Design data flows that extract information from multiple source systems, transform the data as required, and deliver the data to target databases or applications.

- Connect directly to enterprise applications as sources or targets to ensure that the data is relevant, complete, and accurate.

- Reduce development time and improve the consistency of design and deployment by using prebuilt functions.

- Minimize the project delivery cycle by working with a common set of tools across InfoSphere Information Server.

- Job lifecycle: You can develop, test, deploy, and run jobs that move data from source systems to target systems by using IBM InfoSphere DataStage.

- Job designs: Design jobs that extract data from various sources, transform it, and deliver it to target systems in the required formats.

- InfoSphere DataStage integration in InfoSphere Information Server: You can use the capabilities of other IBM InfoSphere Information Server components as you develop and deploy IBM InfoSphere DataStage jobs.
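The ELT pattern can be illustrated outside DataStage with a small sketch: raw rows are loaded into a staging table first, and the target database then performs the transformation in SQL. This uses Python's built-in `sqlite3` module as a stand-in target; the table and column names are hypothetical, and this is not DataStage's own API.

```python
import sqlite3


def elt(rows: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Load raw rows first, then transform inside the target with SQL (ELT)."""
    conn = sqlite3.connect(":memory:")
    # Load: a raw, untyped staging table (hypothetical schema).
    conn.execute("CREATE TABLE staging_orders (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO staging_orders VALUES (?, ?)", rows)
    # Transform: the target database cleans, casts, and aggregates the data.
    conn.execute(
        """
        CREATE TABLE customer_totals AS
        SELECT UPPER(TRIM(customer)) AS customer,
               SUM(CAST(amount AS REAL)) AS total
        FROM staging_orders
        GROUP BY UPPER(TRIM(customer))
        """
    )
    result = conn.execute(
        "SELECT customer, total FROM customer_totals ORDER BY customer"
    ).fetchall()
    conn.close()
    return result
```

In an ETL pattern, the same cleanup would happen in the integration tool before the rows reach the target; ELT pushes that work onto the target database's engine.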

Data Migration Software

Now that you understand what data migration is and how it can help move your data from an old computer to a new one, let’s explore some software that makes the job easier.

1. PCTrans

If you plan to move your data, applications, and accounts from an old system to a new one, PCTrans by EaseUS is designed for the job.

Once the migration is complete, your applications are already installed on the new system, so you don’t need to reinstall them.

Helpful features of EaseUS PCTrans include automatic file transfer and 24/7 transfer guidance, which come in handy if you get stuck at any point while migrating your data. It also integrates with tools such as Dropbox, AutoCAD, Adobe, and MS Office.

2. Acronis

Whether you are planning on moving your files to an upgraded system or creating a backup, Acronis True Image has it all covered.

Acronis is a one-stop solution for your computer data needs. You can migrate, clone, and protect all the data and files on your Windows or Mac computer, and back up everything, including programs, photos, boot information, and operating system information.

There is no need to install multiple tools, as Acronis comes with a built-in anti-malware tool for protecting your data. Combined with its backups, this protects you against threats such as cyberattacks, viruses, and disk failure.

The most notable feature of Acronis is active disk cloning. It lets you create a complete replica of your system even while it is in use, so there is no need to stop working and reboot while migrating data from your old system to the new one.

It also offers simple restore options by retaining up to 20 versions of a single file for a span of 6 months. You can also back up an entire disk image to format, copy, delete, or even partition the image of that particular hard drive.

3. Laplink

With Laplink PCmover, you can migrate every single file, application, and folder, and even your settings.

Because PCmover transfers your installed programs to the new system, you won’t have to search for license codes. Even if your old system runs a different version, you will still be able to restore and transfer all of your data without leaving any of it behind.

The steps of the migration process are:

- First, install Laplink PCmover Professional on both your new and old systems.

- Select the transfer option that you wish to move ahead with.

- Now, sit back and relax! Your new system will have all the settings and programs just like the old one.

Laplink lets you select the files and applications you wish to restore or transfer to your new system. However, you won’t be able to transfer antivirus tools: deactivate your antivirus on the old system and then reactivate it on the new one.

4. AOMEI

AOMEI offers professional partition software to manage all the partitions on your disk. If you have purchased a new system and are planning to migrate all your applications and data, you can do it easily with this tool. You can clone specific partitions or clone the entire hard drive from the old system to the new one.

As professional partition software, it gives you complete control of your dynamic disk partitions. Whether you want to create, format, delete, merge, split, move, clone, or align partitions, you can do it with a few clicks.

You can also perform all the partitioning operations from the Command Prompt, and its Quick Partitioning feature lets you partition a drive with a single click.

The features are not restricted to partitioning; the tool also includes several converters and wizards.

Another useful feature is the Windows To Go Creator, which lets you quickly build a bootable, functional Windows 10/8/7 system on a USB drive. The software has many more features to discover as you explore it.

AOMEI works on Windows.

System Migration

A system migration is the process of transferring business process IT resources to newer hardware infrastructure or a different software platform in order to keep up with current technologies or to gain better business value. In all cases, the move is toward a system perceived to be better than the current one, which is expected to deliver better value in the long run.

A system migration might involve physical migration of computing assets, like when older hardware can no longer provide the required level of performance and meet the business needs of the organization.

Sometimes only data and applications need to be migrated to a new system or platform, which may reside on the same hardware infrastructure. The reason is still the same: the new system is perceived to be better than the old one.

When the migration involves only data and software, the move can be automated using migration software. With the rising popularity of cloud computing, many businesses are migrating their systems to the cloud, often with automated tools and in a relatively short time.

The main drivers of system migration include:

- The current system no longer performs as expected.

- A new technology which drives processes faster becomes available.

- The old system becomes deprecated and support is no longer available for it.

- The company is changing direction.

What is Database Migration?

Database migration — in the context of enterprise applications — means moving your data from one platform to another. There are many reasons you might want to move to a different platform. For example, a company might decide to save money by moving to a cloud-based database.

Or, a company might find that some particular database software has features that are critical for their business needs. Or, the legacy systems are simply outdated.

The process of database migration can involve multiple phases and iterations — including assessing the current databases and future needs of the company, migrating the schema, and normalizing and moving the data. Plus, testing, testing, and more testing.

Database migration is a multiphase process that involves some or all of the following steps:

Assessment. At this stage, you’ll need to gather business requirements, assess the costs and benefits, and perform data profiling. Data profiling is a process by which you get to know your existing data and database schema. You’ll also need to plan how you will move the data — will you use an ETL (Extraction, Transformation, and Loading) tool, scripting, or some other tool to move the data?
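Data profiling can start with a few simple queries. The sketch below, assuming a SQLite source accessed through Python's `sqlite3` module, reports the row count and, for each column, how many values are null and how many are distinct. The function names are hypothetical; profiling tools for other databases expose similar statistics.

```python
import sqlite3


def quote_ident(name: str) -> str:
    """Quote an SQLite identifier; names cannot be bound as query parameters."""
    return '"' + name.replace('"', '""') + '"'


def profile_table(conn: sqlite3.Connection, table: str) -> dict:
    """Return the row count plus per-column null and distinct-value counts."""
    qt = quote_ident(table)
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
    columns = [row[1] for row in conn.execute(f"PRAGMA table_info({qt})")]
    total = conn.execute(f"SELECT COUNT(*) FROM {qt}").fetchone()[0]
    profile: dict = {"rows": total, "columns": {}}
    for col in columns:
        qc = quote_ident(col)
        nulls, distinct = conn.execute(
            f"SELECT SUM({qc} IS NULL), COUNT(DISTINCT {qc}) FROM {qt}"
        ).fetchone()
        # SUM() returns NULL on an empty table, so default to 0.
        profile["columns"][col] = {"nulls": nulls or 0, "distinct": distinct}
    return profile
```

Columns with many nulls or unexpectedly few distinct values are good candidates for cleanup or closer review before the schema is converted.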

Database schema conversion. The schema is a blueprint of how the database is structured, and it varies based on the rules of a given database. When you move data from one system to another, you’ll need to convert the schemas so that the structure of the data works with the new database.
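As a rough illustration of schema conversion, the sketch maps a handful of MySQL column types to PostgreSQL equivalents and builds a `CREATE TABLE` statement. The mapping table is a small, hand-picked subset for illustration only; real converters also handle defaults, constraints, indexes, and many more types. Unknown types are rejected here rather than guessed.

```python
import re

# Illustrative subset of MySQL -> PostgreSQL type mappings (not exhaustive).
TYPE_MAP = {
    "TINYINT(1)": "BOOLEAN",
    "INT": "INTEGER",
    "BIGINT": "BIGINT",
    "DOUBLE": "DOUBLE PRECISION",
    "DATETIME": "TIMESTAMP",
    "LONGTEXT": "TEXT",
}


def convert_type(mysql_type: str) -> str:
    """Translate one MySQL column type, failing loudly on unmapped types."""
    t = mysql_type.strip().upper()
    if t in TYPE_MAP:
        return TYPE_MAP[t]
    # VARCHAR(n) keeps its length limit in PostgreSQL.
    if re.fullmatch(r"VARCHAR\(\d+\)", t):
        return t
    raise ValueError(f"no mapping for type {mysql_type!r}")


def convert_create_table(table: str, columns: list[tuple[str, str]]) -> str:
    """Build a PostgreSQL CREATE TABLE from (column name, MySQL type) pairs."""
    cols = ",\n  ".join(f"{name} {convert_type(typ)}" for name, typ in columns)
    return f"CREATE TABLE {table} (\n  {cols}\n);"
```

Raising an error on unmapped types forces a decision for every column instead of letting an unexpected type slip through the conversion.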

Data migration. After you have completed all the preliminary requirements, you’ll need to actually move the data. This may involve scripting, using an ETL tool or some other tool to move the data. During the migration, you will likely transform the data, normalize data types, and check for errors.
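A scripted data move can transform and error-check rows in batches. This minimal sketch assumes two SQLite databases and a hypothetical `orders` table whose source stores amounts as text and dates as `DD/MM/YYYY`. It normalizes both fields, commits each batch, and returns the rows that could not be converted so they can be reviewed instead of silently dropped.

```python
import sqlite3
from datetime import datetime


def migrate_orders(src: sqlite3.Connection, dst: sqlite3.Connection,
                   batch_size: int = 500) -> list[tuple]:
    """Copy orders in batches, normalizing types; return rows that failed."""
    rejected: list[tuple] = []
    cur = src.execute("SELECT id, amount, order_date FROM orders ORDER BY id")
    while True:
        batch = cur.fetchmany(batch_size)
        if not batch:
            break
        clean = []
        for row_id, amount, order_date in batch:
            try:
                # Normalize: text amount -> float, 'DD/MM/YYYY' -> ISO 8601 date.
                amt = float(amount)
                iso = datetime.strptime(order_date, "%d/%m/%Y").date().isoformat()
                clean.append((row_id, amt, iso))
            except (TypeError, ValueError):
                # None values raise TypeError; malformed text raises ValueError.
                rejected.append((row_id, amount, order_date))
        with dst:  # commit each batch as its own transaction
            dst.executemany("INSERT INTO orders VALUES (?, ?, ?)", clean)
    return rejected
```

Committing per batch keeps transactions small; if the job stops partway, the already-committed batches show how far it got.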

Testing and tuning. Once you’ve moved the data, you need to verify that it was moved correctly, is complete, has no missing or unexpected null values, and is valid.
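Some of these checks can be automated. The sketch below assumes SQLite connections and a straight copy, where source and target rows should be identical. It compares row counts, hashes the rows in key order to detect content differences, and counts nulls in columns that must not be empty. The function names are hypothetical, and table and column names are interpolated directly into SQL, so they must come from a trusted list.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str,
                      key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 over all rows ordered by key)."""
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def validate_migration(src: sqlite3.Connection, dst: sqlite3.Connection,
                       table: str, key: str, required: list[str]) -> list[str]:
    """Return a list of problems; an empty list means all checks passed."""
    problems: list[str] = []
    src_count, src_hash = table_fingerprint(src, table, key)
    dst_count, dst_hash = table_fingerprint(dst, table, key)
    if src_count != dst_count:
        problems.append(f"row count mismatch: {src_count} != {dst_count}")
    elif src_hash != dst_hash:
        problems.append("row contents differ")
    for col in required:
        n = dst.execute(
            f"SELECT COUNT(*) FROM {table} WHERE {col} IS NULL"
        ).fetchone()[0]
        if n:
            problems.append(f"{n} unexpected NULLs in {col}")
    return problems
```

When the migration deliberately transforms data, the hash comparison no longer applies directly; compare against the expected transformed output instead.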

Cloud Data Migration

Cloud migration is the process of moving data, applications or other business elements to a cloud computing environment.

There are various types of cloud migrations an enterprise can perform. One common model is the transfer of data and applications from a local, on-premises data center to the public cloud. However, a cloud migration could also entail moving data and applications from one cloud platform or provider to another — a model known as cloud-to-cloud migration.

A third type of migration is a reverse cloud migration, cloud repatriation, or cloud exit, where data or applications are moved off the cloud and back to a local data center.

The general goal or benefit of any cloud migration is to host applications and data in the most effective IT environment possible, based on factors such as cost, performance and security.

For example, many organizations perform the migration of on-premises applications and data from their local data center to public cloud infrastructure to take advantage of benefits such as greater elasticity, self-service provisioning, redundancy and a flexible, pay-per-use model.

Salesforce Data Migration

Salesforce data migration is the process of moving Salesforce data to other platforms where required. The migration is also an opportunity to cleanse the data, which should demonstrate the following characteristics:

- Completeness: All necessary details should be present for all users

- Relevance: Only the information that is needed should be included

- Accuracy: Details should be accurate

- Timeliness: Data should be up to date and available when you need it

- Accessibility: Data should be accessible whenever you need it

- Validity: Data should be in the correct format

- Reliability: Data should contain authentic information

- Uniqueness: No duplicate records should exist
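Several of these characteristics can be checked automatically before migration. This is a minimal sketch over plain Python dictionaries; the `LastName` and `Email` fields and the simple email pattern are assumptions for illustration, not a Salesforce API. It flags incomplete records, records with an invalid email format, and duplicate emails after trimming and lowercasing.

```python
import re
from collections import Counter

# Hypothetical record shape, loosely modeled on a contact export.
REQUIRED = ("LastName", "Email")
# Deliberately simple pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def quality_report(records: list[dict]) -> dict:
    """Flag incomplete, invalid, and duplicate records before migration."""
    incomplete = [i for i, r in enumerate(records)
                  if any(not r.get(f) for f in REQUIRED)]          # completeness
    invalid = [i for i, r in enumerate(records)
               if r.get("Email")
               and not EMAIL_RE.fullmatch(r["Email"].strip())]   # validity
    counts = Counter(r["Email"].strip().lower()
                     for r in records if r.get("Email"))
    duplicates = sorted(e for e, n in counts.items() if n > 1)   # uniqueness
    return {"incomplete": incomplete, "invalid": invalid,
            "duplicates": duplicates}
```

A report like this gives you a concrete cleanup list before the data lands in its new single repository.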

To achieve these standards, the data often needs to be in a single repository where it becomes easy to update, validate and back up the data. Therefore, it makes sense to perform Salesforce migration to create a single repository of data.

There are a number of ways to migrate Salesforce data, but the process requires skills across a range of disciplines, including knowledge of Salesforce database management systems and connecting technologies. Migrating large volumes of Salesforce data also requires patience and care.

Free Data Migration Software

1. CloverDX

CloverDX is a data integration and data management tool that can help you design, automate, operate, and publish data.

The tool functions in three ways:

- Data Warehouse – Customer onboarding, data transformation, and data validation.

- Data Migration – Complex migration projects, migrating messy data, and migrating data on a deadline.

- Data Ingestion – Customer onboarding, data transformation, and data validation.

Small to medium-sized enterprises rely on CloverDX to design, automate, and operate workflows that handle large amounts of data. The company also claims to provide a rapid data processing interface.

This means that, along with smooth raw data processing, CloverDX also supports end-to-end processing. Its flexibility, lightweight footprint, and fast processing speed also make CloverDX suitable for handling large amounts of data.

You can also design and orchestrate workloads in the right sequence and monitor productivity, which helps you maintain a consistent workflow, either on-premises or in the cloud.

2. O&O DiskImage

O&O DiskImage is a data migration tool that helps you back up an entire computer or individual files – even while the system is still in use. It also helps you clone, protect, recover, and store all your essential data. You can restore your data even if you’re unable to start your Windows system.

Carrying a CD or USB drive as a backup device is a thing of the past. Cloning your data with O&O DiskImage makes it easy to access anytime, from anywhere. It also provides an extra layer of security and portability options for accessing data in case of system damage.

The tool can also create VHDs directly, run automatic backups, restore stored backups, and more.

3. IBM InfoSphere Data Replication

IBM InfoSphere Data Replication is a data integration and replication platform that helps you migrate, cleanse, moderate, or transform your data across platforms. The on-premises tool lets you access and integrate data from all platforms to support your business.

It comes with massively parallel processing (MPP) capabilities suitable for handling all data volumes.

Aside from data migration, it also helps with data governance, maintaining data quality, and delivering information to critical business initiatives. Other functions include application consolidation, dynamic warehousing, master data management (MDM), service-oriented architecture (SOA), and more.

It also works as a centralized data monitoring platform that handles deployment and data integration processes through a simple GUI.

4. Macrium Reflect 7

Macrium Reflect 7 is image-based data migration software that provides backup, disk imaging, and cloning solutions. The tool builds an exact replica of a hard disk or selected partitions, which you can use to restore the disk. If you face any kind of system failure, you can easily get back your files and folders.

The software supports local, network, and USB drives and is suitable for both home and business use.

5. Laplink PCmover

Laplink PCmover is a data migration tool that lets you migrate every file, folder, application, and even your settings to your new system. Because it transfers your installed programs so they are ready to use on the new system, there’s no need for license codes or old CDs.

It also restores and transfers data without leaving any of it behind, even when the target system runs a different version.

The migration process is quick and takes three steps:

- Install PCmover Professional on both your old and new systems.

- Choose the transfer option from the dashboard.

- And it’s done! Your new system now has the same settings and programs as your previous one.

Migration Strategy

More often than not, companies are wary of database migration because of the underlying challenges, including data security, lack of collaboration, the absence of an integrated process, and a lack of competency to analyze the flow. That is why it is important to follow best practices for database migration and pay close attention to detail.

Below are the best strategies for database migration that you can follow:

Always back up prior to execution

Let’s take a simple example. When you transfer data from your old mobile phone to a new one, you first back it up and then proceed.

Similarly, always back up your data before you proceed with the migration, and store the backup in a tested backup storage system.
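For a SQLite database, a backup can be taken and checked from Python. This sketch uses the `sqlite3` module's `Connection.backup` method (available since Python 3.7), then runs `PRAGMA integrity_check` on the copy and compares row counts for each table, so the backup is tested rather than assumed to be good. Other database systems have their own backup tools; the function name here is hypothetical.

```python
import sqlite3


def backup_and_verify(src: sqlite3.Connection, backup_path: str) -> bool:
    """Copy the database with SQLite's online backup API, then verify the copy."""
    dst = sqlite3.connect(backup_path)
    try:
        src.backup(dst)
        # A backup is only useful if it can be read back: check integrity...
        ok = dst.execute("PRAGMA integrity_check").fetchone()[0] == "ok"
        # ...and compare row counts for every user table.
        tables = [r[0] for r in src.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'")]
        for t in tables:
            q = 'SELECT COUNT(*) FROM "' + t.replace('"', '""') + '"'
            if src.execute(q).fetchone()[0] != dst.execute(q).fetchone()[0]:
                ok = False
        return ok
    finally:
        dst.close()
```

In practice, `backup_path` would point to separate storage, and a periodic test restore from that location is the stronger check.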

Preparing the old database

You do not want to carry redundant data over to the new database infrastructure, so it is a good idea to spend some time on the old database and sort out the relevant data. If you find any junk, you can delete it straight away.

Once the old database is cleaned up, you carry over only the required data, which saves time and effort.
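Cleanup of the old database can often be expressed in SQL. The sketch below assumes a hypothetical SQLite `customers` table in which rows without an email count as junk. It deletes those rows and then removes duplicates, keeping the earliest row for each normalized email. Run it only after a verified backup, since the deletions are permanent.

```python
import sqlite3


def clean_before_migration(conn: sqlite3.Connection) -> int:
    """Delete junk and duplicate customer rows; return the number removed."""
    before = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    with conn:  # both deletions commit together, or not at all
        # Junk (hypothetical rule): rows with no usable email.
        conn.execute(
            "DELETE FROM customers WHERE email IS NULL OR TRIM(email) = ''"
        )
        # Duplicates: keep the earliest row for each normalized email.
        conn.execute(
            """
            DELETE FROM customers
            WHERE rowid NOT IN (
                SELECT MIN(rowid) FROM customers GROUP BY LOWER(TRIM(email))
            )
            """
        )
    after = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    return before - after
```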

Seek advice and assistance from the experts

Experienced database specialists can often do more for your migration than general service providers. An expert database service provider can help with both the planning and the execution of the database migration process.

Experts can help you decide whether to use database migrations in Laravel or in CodeIgniter.

They have performed the steps of the migration process many times for companies of different sizes, so they can better understand your database migration needs.

Prioritizing the data

As discussed earlier, there are two methods for database migration: in parts and as a whole. Generally, phase-based database migration is considered the safer method, owing to the testing carried out at each stage.


Similarly, when you opt for database migration, it is important to rank or prioritize the data you are transferring. This way, you can transfer the high-priority data in the first load and then move on to the low-risk data.
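A phased, priority-based migration can be sketched as a series of waves. In this minimal Python example, each table is assigned a priority (1 runs first), tables with the same priority form one wave, and the run stops if any table in a wave fails validation. The table names and the `migrate` and `validate` callbacks are hypothetical placeholders for real migration and check logic.

```python
from typing import Callable


def plan_waves(priorities: dict[str, int]) -> list[list[str]]:
    """Group tables into waves by priority; priority 1 is migrated first."""
    waves: dict[int, list[str]] = {}
    for table, prio in priorities.items():
        waves.setdefault(prio, []).append(table)
    return [sorted(waves[p]) for p in sorted(waves)]


def run_phased(waves: list[list[str]],
               migrate: Callable[[str], None],
               validate: Callable[[str], bool]) -> list[str]:
    """Migrate wave by wave; stop at the first wave that fails validation."""
    done: list[str] = []
    for wave in waves:
        for table in wave:
            migrate(table)
        if not all(validate(t) for t in wave):
            raise RuntimeError(f"wave {wave} failed validation; stopping")
        done.extend(wave)
    return done
```

Stopping after a failed wave means lower-priority data is never moved on top of a migration that has already gone wrong.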

Data Conversion Tools

Complex transformations, field-to-field mapping, data profiling, and other steps can be simplified by using data conversion software. Broadly, these tools are divided into three types:

Scripting Tools: This is a manual method that uses Python or SQL scripts to extract, transform, and load data.

On-premise ETL Tools: Hosted on the company’s servers and native computing infrastructure, these tools automate the ongoing process of standardizing data and eliminate the need to write code. The organization needs to license or purchase a copy from the software vendor to use these data conversion tools.

Cloud-based ETL Tools: These tools allow the organization to leverage the infrastructure and expertise of the software vendor via the cloud.
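As an example of the scripting approach, here is a minimal Python ETL sketch. It extracts rows from CSV text, transforms them in Python (trimming names, lowercasing emails, converting amounts to integer cents), and then loads them into SQLite. The column names and target table are assumptions for illustration.

```python
import csv
import io
import sqlite3


def run_etl(csv_text: str, conn: sqlite3.Connection) -> int:
    """Extract rows from CSV, transform them in Python, load them into SQLite."""
    # Extract: parse the CSV header and rows into dictionaries.
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Transform: trim names, lowercase emails, store amounts as integer cents.
    transformed = [
        (r["name"].strip(), r["email"].strip().lower(),
         round(float(r["amount"]) * 100))
        for r in rows
    ]
    # Load: create the target table if needed and insert in one transaction.
    conn.execute("CREATE TABLE IF NOT EXISTS customers "
                 "(name TEXT, email TEXT, balance_cents INTEGER)")
    with conn:
        conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", transformed)
    return len(transformed)
```

Storing money as integer cents avoids floating-point rounding surprises in the target system.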

Conclusion

As you plan a data migration, understanding how the process works is an essential step. Most data is migrated during a system upgrade. Migration involves many challenges, but most of them can be addressed by following best practices.

We covered different data migration strategies that can improve the migration process. Once data is lost, recovering it is more of a hassle than migrating it carefully.